A couple of years back, I worked with a client (who is also a friend) on a project to replace SCOM monitoring with a Log Analytics based alerting system. It was an exciting project because we were designing the solution end to end, but for me the best part was running PowerShell runbooks from Azure or from a Hybrid Runbook Worker on-premises. This meant that I didn't need a Windows machine anywhere to run PowerShell, and I could still automate things with the same good old PowerShell. This capability can be just as useful for newer solutions, such as Microsoft Entra ID lifecycle workflow custom extensions.

In this blog post (yes, I know I am writing after a long time), I will walk through an end-to-end solution for creating an alert with a highly customized notification email. Even if you do not go the ITSM connector way, you can send this customized email to a specific mailbox that triggers a ServiceNow workflow, which then creates a ticket with the relevant details. The same capability can serve many other use cases as well.

Step 1: Get an Azure subscription and some credits. The Automation account will not cost much for the runbook runs, but an active subscription is a must, otherwise Azure will not let you create one. The credits matter mostly for log ingestion into the Log Analytics workspace.

Step 2: Create a Log Analytics workspace with any name, along with the resource group in which it will be created. Choose a suitable location for performance and cost reasons. The pricing tier can be pay-as-you-go.

Step 3: Create an Automation account, along with its resource group, where we will create the runbook later on.

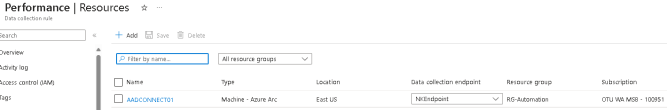
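If you prefer to script Steps 2 and 3 instead of clicking through the portal, a minimal sketch with the Az PowerShell module could look like the following. It assumes the Az.Resources, Az.OperationalInsights and Az.Automation modules are installed and that you have already signed in with `Connect-AzAccount` (and picked the subscription with `Set-AzContext` if you have several). The function name `New-AlertingFoundation` and all resource names are placeholders of my own; `PerGB2018` is the pay-as-you-go SKU of the workspace.

```powershell
# Hypothetical helper: creates the resource group, Log Analytics workspace and
# Automation account used in this post. Assumes an existing Connect-AzAccount session.
function New-AlertingFoundation {
    param (
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$WorkspaceName,
        [Parameter(Mandatory)][string]$AutomationAccountName,
        [Parameter(Mandatory)][string]$Location
    )

    # Resource group that holds both resources (-Force skips the confirmation prompt)
    New-AzResourceGroup -Name $ResourceGroupName -Location $Location -Force | Out-Null

    # Log Analytics workspace on the pay-as-you-go (per GB) pricing tier
    $workspace = New-AzOperationalInsightsWorkspace -ResourceGroupName $ResourceGroupName `
        -Name $WorkspaceName -Location $Location -Sku "PerGB2018"

    # Automation account that will host the runbook
    $automation = New-AzAutomationAccount -ResourceGroupName $ResourceGroupName `
        -Name $AutomationAccountName -Location $Location -Plan "Basic"

    # Return the values needed in the later steps
    [pscustomobject]@{
        WorkspaceId           = $workspace.CustomerId   # the workspace ID
        AutomationAccountName = $automation.AutomationAccountName
    }
}
```

Usage would be something like `New-AlertingFoundation -ResourceGroupName "rg-alerting" -WorkspaceName "law-alerting" -AutomationAccountName "aa-alerting" -Location "westeurope"`, with names and region of your choice.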

Step 4: Onboard the machines from which you need to ingest logs. Earlier, the way was to download the Log Analytics agent and configure it with the workspace ID and key, but that agent was retired in August 2024, so the way forward is the Azure Monitor Agent. For machines outside Azure, you first onboard the server to Azure Arc and then add the Azure Monitor Agent as an extension. Once the machine is onboarded, you configure which logs are pushed to the workspace with Data Collection Rules (DCRs).
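A DCR that collects selected Windows events filters them with XPath queries in the form `LogName!XPath`, so you only ingest (and pay for) the events you actually alert on. As a rough sketch, the hypothetical helper below builds such a query for the logon event used later in this post.

```powershell
# Hypothetical helper: builds a DCR XPath query in the "<LogName>!<XPath>" form
# used by Windows event log data sources.
function New-DcrXPathQuery {
    param (
        [Parameter(Mandatory)][string]$LogName,
        [Parameter(Mandatory)][int[]]$EventId
    )
    # Join the IDs as "EventID=4624 or EventID=4625 ..."
    $filter = ($EventId | ForEach-Object { "EventID=$_" }) -join " or "
    "$LogName!*[System[($filter)]]"
}

# Successful logons only
New-DcrXPathQuery -LogName "Security" -EventId 4624
```

Before putting the query into the DCR, you can try the part after `!` on a Windows machine, for example `Get-WinEvent -LogName Security -FilterXPath '*[System[(EventID=4624)]]' -MaxEvents 5` (reading the Security log needs an elevated session).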

Step 5: Once the logs are reaching the Log Analytics workspace, it is time to set up the alert and the runbook that sends an email when the alert fires. Let's see how to go about that.

Search for Alerts in the Azure portal and click Alert rules. Click Create and choose the relevant Log Analytics workspace as the scope. Then, under Condition, choose Custom log search as the signal and enter the Kusto (KQL) query for the condition you want to evaluate. A sample one, which picks successful interactive (LogonType 2) and Remote Desktop (LogonType 10) logons, is below:

```kusto
SecurityEvent
| where EventID == 4624 and (LogonType == 10 or LogonType == 2)
| project TimeGenerated, Account, AccountType, Computer, LogonType
```

Next, set the evaluation frequency and threshold on the same page. The next page offers the option to add an action group, which we will come back to later. On the Details page, you can choose the severity of the alert and the identity (which can be left at its default). This is enough to create the alert rule.

Once the runbook is ready, you can come back to create the action group and then add it to the alert rule.
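Before relying on the alert rule, it can help to run the same query from PowerShell over the window the rule will evaluate and see how many rows come back compared with your threshold. A minimal sketch, assuming the Az.OperationalInsights module and an existing `Connect-AzAccount` session; the function name `Test-AlertQuery` is hypothetical, and `WorkspaceId` is the workspace ID (a GUID), not the full resource ID.

```powershell
# Hypothetical helper: runs the alert's KQL query over a recent time window
# with Invoke-AzOperationalInsightsQuery and returns the rows.
function Test-AlertQuery {
    param (
        [Parameter(Mandatory)][string]$WorkspaceId,
        [int]$LookbackMinutes = 15
    )
    # Same query as the alert rule: interactive (2) and remote interactive (10) logons
    $query = @"
SecurityEvent
| where EventID == 4624 and (LogonType == 10 or LogonType == 2)
| project TimeGenerated, Account, AccountType, Computer, LogonType
"@
    $response = Invoke-AzOperationalInsightsQuery -WorkspaceId $WorkspaceId -Query $query `
        -Timespan (New-TimeSpan -Minutes $LookbackMinutes)

    # Results holds one object per row returned by the query
    @($response.Results)
}
```

For example, `(Test-AlertQuery -WorkspaceId "<workspace ID>" -LookbackMinutes 15).Count` gives the number of matching rows in the last 15 minutes.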

Step 6: For credentials, we need a service principal. At a bare minimum it needs the Mail.Send permission, but I would also add Data.Read so that it can read the Log Analytics query results.

Go to App registrations and create a new registration with a name of your choice. Once it is created, note down the Tenant ID and the Application (client) ID, then create a client secret and note down its value. Next, grant the permissions: under API permissions, add the Microsoft Graph Mail.Send application permission so that the app can send email as any user (for a more secure configuration, restrict it to specific mailboxes, for example with an Exchange Online application access policy). Then, from Add a permission > APIs my organization uses, pick Log Analytics API and add the Data.Read permission. Finally, grant admin consent so that your identity is ready. The app will most likely also need read access on the workspace itself (for example the Log Analytics Reader role under the workspace's Access control (IAM)), otherwise the query call from the runbook can be refused. The Tenant ID is used in the runbook script, while the Application ID and the secret go into an Automation credential.
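A common stumbling block is a missing admin consent. One way to check it is to request a token with the same client credentials flow the runbook uses and look at the `roles` claim, which for app-only tokens lists the application permissions granted. The hypothetical helper below only base64url-decodes the token payload; it does not validate the signature, so treat it as a troubleshooting aid only.

```powershell
# Hypothetical troubleshooting helper: returns the "roles" claim of a JWT access token.
# It only decodes the payload; it does NOT validate the token signature.
function Get-TokenRole {
    param ([Parameter(Mandatory)][string]$AccessToken)

    # A JWT is header.payload.signature; take the payload segment
    $payload = $AccessToken.Split('.')[1]

    # Convert base64url to standard base64 and restore the padding
    $payload = $payload.Replace('-', '+').Replace('_', '/')
    switch ($payload.Length % 4) {
        2 { $payload += '==' }
        3 { $payload += '=' }
    }

    $json = [System.Text.Encoding]::UTF8.GetString([System.Convert]::FromBase64String($payload))
    ($json | ConvertFrom-Json).roles
}
```

With a Graph token obtained as in the runbook's `Send-CustomMail` function, `Get-TokenRole $tokenResponse.access_token` should list `Mail.Send`; a token requested with the scope `https://api.loganalytics.io/.default` should list `Data.Read`.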

To create the credential, go to Automation accounts, select the account you created, and under Shared Resources > Credentials add a new credential. I named it GA and referred to that name in the script (you can choose any other name, but then you need to update the script). For the username, enter the Application ID, and for the password, enter the secret value. Your identity is now done.
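The same credential can be created from PowerShell. A sketch, assuming the Az.Automation module and an existing `Connect-AzAccount` session; the function name `Set-RunbookCredential` is hypothetical. The secret is passed as a `SecureString` so that it does not end up in plain text in your console history.

```powershell
# Hypothetical helper: stores the app registration's client ID and secret as an
# Automation credential (username = application ID, password = secret value).
function Set-RunbookCredential {
    param (
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$AutomationAccountName,
        [Parameter(Mandatory)][string]$ApplicationId,
        [Parameter(Mandatory)][securestring]$ClientSecret,
        [string]$CredentialName = "GA"   # must match the name used in the runbook
    )
    $credential = [pscredential]::new($ApplicationId, $ClientSecret)
    New-AzAutomationCredential -ResourceGroupName $ResourceGroupName `
        -AutomationAccountName $AutomationAccountName -Name $CredentialName -Value $credential
}
```

For example: `Set-RunbookCredential -ResourceGroupName "rg-alerting" -AutomationAccountName "aa-alerting" -ApplicationId "<application ID>" -ClientSecret (Read-Host -AsSecureString "Client secret")`.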

Step 7: Go to Automation accounts, open the Automation account you created in Step 3 and go to Runbooks. Create a runbook: give it a name, choose PowerShell as the runbook type, select runtime version 5.1 and click Create. Once it is created, open it, click Edit and add code like the following:

```powershell
param (
    # Populated by Azure Automation when the runbook is started from a webhook
    [Parameter(Mandatory = $false)][object]$WebhookData,
    [Parameter(Mandatory = $false)][string]$tenantID = "xxxxxxxxxxx",
    [Parameter(Mandatory = $false)][string]$mailSender = "xxxxxxxxxxx@xxxxxxxxxx.onmicrosoft.com",
    # One address, or several separated by ";" or ","
    [Parameter(Mandatory = $false)][string]$recipients = "nitish@nitishkumar.net"
)

# Replace "xxxxx" with the name of the Automation credential created in Step 6 (GA in this post)
$credential = Get-AutomationPSCredential -Name "xxxxx"

# Function to get log query results from the Log Analytics API URL
function Get-QueryResults {
    param (
        [Parameter(Mandatory = $false)][string]$queryUrl,
        [Parameter(Mandatory = $false)][pscredential]$credential,
        [Parameter(Mandatory = $false)][string]$tenantID
    )

    # Az modules are available by default in Azure Automation.
    # Discard the connection output so it is not returned together with the results.
    $null = Connect-AzAccount -ServicePrincipal -Credential $credential -Tenant $tenantID
    $token = (Get-AzAccessToken -ResourceUrl "https://api.loganalytics.io").Token
    # Newer Az.Accounts versions return the token as a SecureString; convert it if so
    if ($token -is [securestring]) {
        $token = [System.Net.NetworkCredential]::new("", $token).Password
    }

    $logQueryHeaders = @{
        Authorization = "Bearer $token"
    }
    $resultsTable = Invoke-RestMethod -Method Get -Uri $queryUrl -Headers $logQueryHeaders

    # Count the rows across all tables in the query results
    $count = 0
    foreach ($table in $resultsTable.Tables) {
        $count += $table.Rows.Count
    }

    # Create an array of that size
    $results = New-Object object[] $count

    # Turn each row into an object whose property names are the column names
    $i = 0
    foreach ($table in $resultsTable.Tables) {
        foreach ($row in $table.Rows) {
            $properties = @{}
            for ($columnNum = 0; $columnNum -lt $table.Columns.Count; $columnNum++) {
                $properties[$table.Columns[$columnNum].name] = $row[$columnNum]
            }
            $results[$i] = New-Object PSObject -Property $properties
            $i++
        }
    }

    return $results
}

# Function to send a custom email through Microsoft Graph
function Send-CustomMail {
    param (
        [Parameter(Mandatory = $false)][string]$mailSender,
        [Parameter(Mandatory = $false)][string]$recipients,
        [Parameter(Mandatory = $false)][string]$subject,
        [Parameter(Mandatory = $false)][string]$mailbody,
        [Parameter(Mandatory = $false)][pscredential]$credential,
        [Parameter(Mandatory = $false)][string]$tenantID
    )

    $clientID = $credential.UserName
    $clientSecret = $credential.GetNetworkCredential().Password

    # Get a Microsoft Graph token with the client credentials flow
    $tokenBody = @{
        Grant_Type    = "client_credentials"
        Scope         = "https://graph.microsoft.com/.default"
        Client_Id     = $clientID
        Client_Secret = $clientSecret
    }
    $tokenResponse = Invoke-RestMethod -Uri "https://login.microsoftonline.com/$tenantID/oauth2/v2.0/token" -Method POST -Body $tokenBody

    $headers = @{
        "Authorization" = "Bearer $($tokenResponse.access_token)"
        "Content-type"  = "application/json"
    }

    # Send the email as $mailSender
    $URLsend = "https://graph.microsoft.com/v1.0/users/$mailSender/sendMail"
    $BodyJsonsend = @{
        "Message" = @{
            "Subject" = "$subject"
            "Body"    = @{
                "ContentType" = "Html"
                "Content"     = "$mailbody"
            }
            # One recipient entry per address in $recipients
            "ToRecipients" = @(
                $recipients -split '[;,]' | Where-Object { $_.Trim() } | ForEach-Object {
                    @{ "EmailAddress" = @{ "Address" = $_.Trim() } }
                }
            )
        }
        # Graph expects a boolean here, not the string "false"
        "SaveToSentItems" = $false
    } | ConvertTo-Json -Depth 5

    Invoke-RestMethod -Method POST -Uri $URLsend -Headers $headers -Body $BodyJsonsend
}

if ($null -ne $WebhookData) {
    # Parse the webhook body (common alert schema JSON)
    $essentials = $WebhookData.RequestBody | ConvertFrom-Json

    # Extract the values of interest from the JSON data
    $AlertRule = $essentials.data.essentials.alertRule
    $severity = $essentials.data.essentials.severity
    $signalType = $essentials.data.essentials.signalType
    $firedDateTime = $essentials.data.essentials.firedDateTime
    $conditionType = $essentials.data.alertContext.conditionType
    $condition = $essentials.data.alertContext.condition.allOf[0].searchQuery
    $threshold = $essentials.data.alertContext.condition.allOf[0].threshold
    $Count = $essentials.data.alertContext.condition.allOf[0].metricValue
    # The API link is used by the runbook itself (it needs a bearer token);
    # the UI link opens the same results in the Azure portal for the email reader.
    $resultsUrl = $essentials.data.alertContext.condition.allOf[0].linkToFilteredSearchResultsAPI
    $resultsUiUrl = $essentials.data.alertContext.condition.allOf[0].linkToFilteredSearchResultsUI

    $header = @"
<style>
h1 { font-family: Arial, Helvetica, sans-serif; color: #e68a00; font-size: 28px; }
h2 { font-family: Arial, Helvetica, sans-serif; color: #000099; font-size: 16px; }
table { font-size: 12px; border: 2px; font-family: Arial, Helvetica, sans-serif; }
td { padding: 4px; margin: 0px; border: 1; }
th { background: #395870; background: linear-gradient(#49708f, #293f50); color: #fff; font-size: 11px; text-transform: uppercase; padding: 10px 15px; vertical-align: middle; }
tbody tr:nth-child(even) { background: #f0f0f2; }
</style>
"@

    $results = Get-QueryResults -TenantId $tenantID -Credential $credential -queryUrl $resultsUrl

    # Render the rows as an HTML table; backslashes (e.g. DOMAIN\user) are replaced with "/"
    $resultsOutput = ($results | Select-Object TimeGenerated, Computer, Account, AccountType, LogonType | ConvertTo-Html -As Table -Fragment) -replace "\\", "/"

    $mailbody = "$($header)Alert rule $AlertRule fired because the $conditionType condition below was met $Count times (threshold: $threshold) <br><br> $condition <br><br> $resultsOutput <a href='$resultsUiUrl'>Link to query results</a>"

    $Subject = "$AlertRule - $severity - $signalType - $firedDateTime"

    Send-CustomMail -TenantId $tenantID -subject $Subject -recipients $recipients -mailSender $mailSender -mailbody $mailbody -Credential $credential
}
```
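Because the runbook only reads a handful of fields from the webhook body, you can exercise that parsing locally before wiring up the alert. The sketch below builds a trimmed, made-up payload in the common alert schema shape the runbook expects (`data.essentials` and `data.alertContext.condition.allOf`), wraps it the way Azure Automation passes `$WebhookData` (the body arrives as a string in `RequestBody`) and reads the same fields. All values are invented placeholders, and a real payload carries many more properties.

```powershell
# Made-up, trimmed sample of a common alert schema payload for a log search alert.
# Only the fields read by the runbook are included; all values are placeholders.
$samplePayload = @{
    schemaId = "azureMonitorCommonAlertSchema"
    data     = @{
        essentials   = @{
            alertRule     = "Interactive logons"
            severity      = "Sev3"
            signalType    = "Log"
            firedDateTime = "2024-01-01T10:00:00Z"
        }
        alertContext = @{
            conditionType = "LogQueryCriteria"
            condition     = @{
                allOf = @(
                    @{
                        searchQuery                    = "SecurityEvent | where EventID == 4624"
                        threshold                      = 0
                        metricValue                    = 3
                        linkToFilteredSearchResultsAPI = "https://api.loganalytics.io/v1/workspaces/00000000-0000-0000-0000-000000000000/query"
                    }
                )
            }
        }
    }
}

# Azure Automation hands the webhook body to the runbook as a string in RequestBody
$WebhookData = [pscustomobject]@{ RequestBody = ($samplePayload | ConvertTo-Json -Depth 10) }

# Same parsing as the runbook
$essentials = $WebhookData.RequestBody | ConvertFrom-Json
$condition = $essentials.data.alertContext.condition.allOf[0]

[pscustomobject]@{
    AlertRule  = $essentials.data.essentials.alertRule
    Severity   = $essentials.data.essentials.severity
    Count      = $condition.metricValue
    Threshold  = $condition.threshold
    ResultsUrl = $condition.linkToFilteredSearchResultsAPI
}
```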

Save and publish the runbook (a webhook can only be created for a published runbook). One more thing remains: add a webhook. Open the runbook, click Add webhook, give it a name, change the expiry if needed and copy the URL; it is shown only once, at creation time. You don't need to change the parameters. Now you are ready to go back to action groups.
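The runbook and its webhook can also be deployed from PowerShell, which is handy when rebuilding the setup elsewhere. A sketch, assuming the Az.Automation module, an existing `Connect-AzAccount` session and the runbook code saved locally as a `.ps1` file; the function name, default runbook name and webhook name are placeholders of my own. As in the portal, the webhook URL is only returned at creation time, so store it somewhere safe.

```powershell
# Hypothetical helper: imports and publishes the runbook, then creates a webhook for it
# and returns the webhook URL.
function Publish-AlertRunbook {
    param (
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$AutomationAccountName,
        [Parameter(Mandatory)][string]$Path,          # local .ps1 file with the runbook code
        [string]$RunbookName = "Send-AlertMail",
        [int]$WebhookValidityDays = 365
    )
    $common = @{
        ResourceGroupName     = $ResourceGroupName
        AutomationAccountName = $AutomationAccountName
    }

    # Import as a PowerShell runbook and publish it, since webhooks need a published runbook
    Import-AzAutomationRunbook @common -Path $Path -Name $RunbookName -Type PowerShell -Published -Force | Out-Null

    # Create the webhook; WebhookURI is only populated in this response
    $webhook = New-AzAutomationWebhook @common -Name "$RunbookName-webhook" -RunbookName $RunbookName `
        -IsEnabled $true -ExpiryTime (Get-Date).AddDays($WebhookValidityDays) -Force
    $webhook.WebhookURI
}
```

The returned URL is what goes into the action group. To trigger a test run without waiting for an alert, you could POST a common alert schema JSON body to it with `Invoke-RestMethod -Method Post -Uri $webhookUrl -Body $json`; the response lists the `JobIds` of the started runbook jobs.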

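Step 8: Go to Alerts > Action groups and create an action group (for example, send-mail). Under Actions, choose Webhook, give it a name and paste the webhook URL you noted earlier. Set Enable the common alert schema to Yes, because the runbook reads fields such as data.essentials and data.alertContext that come from that schema, and save. Then edit the alert rule from Step 5 and add this action group to it.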

Final: That should be all. When the alert rule's condition is met, the alert fires and triggers the runbook, which sends an email in the format customized in the code.

Let me know what you think of the solution, what use cases you can think of, and what improvements are possible.